
Meta has launched an AI-powered “adult classifier” to protect underage users on Instagram

Meta, the parent company of Instagram and Facebook, is stepping up its efforts to keep young users safe from violence and other harms on social media. Its AI-powered “adult classifier” is the latest in a series of updates the company has introduced to strengthen account protections against online threats, from exposure to harmful material to unwanted contact from strangers.

So why is Meta focusing on protecting teens?

As a new generation grows up on digital platforms, those platforms carry a growing responsibility to protect their many underage users. Over the years, state attorneys general (AGs) have voiced mounting concern about how teenagers are affected by Meta’s platforms. Last year, the company was sued by 33 state attorneys general across the U.S., who alleged that Meta deliberately designs features that addict minors in ways that can harm their mental and physical health. Meta’s efforts to get the case dismissed were unsuccessful, and the company has since devoted more time and resources to strengthening safety measures for younger users.

In response, Meta has rolled out several key features that lock down teen accounts and limit teens’ exposure to potentially harmful content. These include moving underage users into “Teen Accounts” with stronger privacy and safety settings, and restricting direct messages (DMs) from strangers to reduce the risk of dangerous interactions.


The AI-Powered “Adult Classifier” Tool

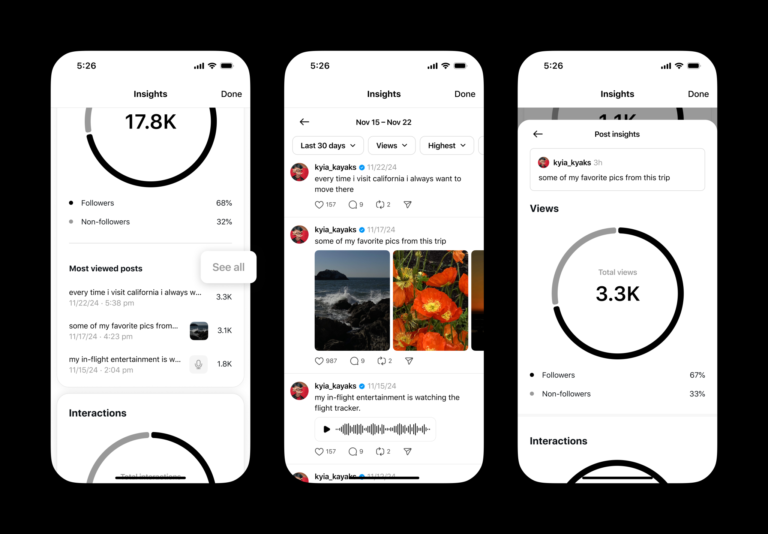

The latest step in Meta’s strategy is an AI tool designed to detect and flag underage users. The “adult classifier” automatically scans user profiles, analyzing data points such as a user’s followers, the types of content they engage with, and even birthday posts shared by friends. It uses these signals to spot discrepancies between a user’s stated age and their activity, such as when someone who claims to be an adult interacts with content typically associated with younger users, or vice versa.

When the AI tool detects that a user may have lied about their age, it automatically reassigns their account to the restricted “Teen Account” category. This switch ensures that the user’s profile is subjected to tighter controls, which include content restrictions and privacy settings designed to limit exposure to inappropriate or potentially harmful content.
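Meta has not disclosed how the classifier actually weighs these signals, so the following is only a toy illustration of the general idea: combine several profile signals, flag a self-declared adult whose signals look underage, and route them to the Teen Account tier. The `ProfileSignals` fields, the weights, and the threshold are all hypothetical values chosen for this sketch, not Meta’s.

```python
from dataclasses import dataclass
from typing import Optional

# HYPOTHETICAL signals and weights: Meta has not disclosed how its classifier works.
@dataclass
class ProfileSignals:
    stated_age: int                   # age the user entered at sign-up
    teen_follower_share: float        # fraction of followers who appear to be teens (0..1)
    teen_content_share: float         # fraction of engaged content typical of younger users (0..1)
    birthday_post_age: Optional[int]  # age mentioned in a friend's birthday post, if any

WEIGHTS = {"followers": 0.4, "content": 0.4}  # illustrative values only
THRESHOLD = 0.5                               # illustrative cut-off only

def contradicts_adult_claim(s: ProfileSignals) -> bool:
    """Return True if the signals suggest a self-declared adult is actually under 18."""
    if s.stated_age < 18:
        return False  # not claiming to be an adult, so there is nothing to contradict
    # A friend's birthday post naming an under-18 age is treated as strong evidence.
    if s.birthday_post_age is not None and s.birthday_post_age < 18:
        return True
    # Otherwise combine the weaker behavioral signals into a single score.
    score = (WEIGHTS["followers"] * s.teen_follower_share
             + WEIGHTS["content"] * s.teen_content_share)
    return score >= THRESHOLD

def assign_account_type(s: ProfileSignals) -> str:
    """Place declared minors and suspected minors into the restricted Teen Account tier."""
    if s.stated_age < 18 or contradicts_adult_claim(s):
        return "teen"
    return "standard"

# Example: a self-declared 25-year-old whose followers and feed skew heavily teen.
print(assign_account_type(ProfileSignals(25, 0.9, 0.9, None)))  # teen
```

A real system would almost certainly use a trained model rather than hand-set weights; the point here is only the flow from signals, to a discrepancy check, to reassignment.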

Users moved to a Teen Account by the AI tool will be placed into one of two age groups: 16- and 17-year-olds, and those under 16. Users in the first group will have some flexibility to adjust their privacy settings, but they will still face restrictions on the content they can interact with and on who can message them. For users under 16, however, any change to account settings will require parental approval, giving parents or guardians control over how their child engages with the platform.
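The two-tier rule described above can be expressed as a small policy lookup. This is a sketch under a simplifying assumption: the article does not say which settings 16- and 17-year-olds may change on their own, so every setting change is treated the same way here. The class, field, and function names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TeenAccountPolicy:
    age_group: str
    self_serve_setting_changes: bool  # may the teen change privacy settings alone?
    parental_approval_required: bool  # must a parent or guardian approve changes?
    content_restricted: bool          # stricter content rules apply to both groups
    messaging_restricted: bool        # limits on who can message apply to both groups

def policy_for(age: int) -> TeenAccountPolicy:
    """Map a user's age to the Teen Account tier described in the article."""
    if age >= 18:
        raise ValueError("adults are not placed in Teen Accounts")
    if age >= 16:
        return TeenAccountPolicy("16-17", True, False, True, True)
    return TeenAccountPolicy("under 16", False, True, True, True)

def can_change_settings(age: int, parent_approved: bool = False) -> bool:
    """16- and 17-year-olds may change settings themselves; under-16s need a parent's approval."""
    policy = policy_for(age)
    if policy.self_serve_setting_changes:
        return True
    return policy.parental_approval_required and parent_approved

print(can_change_settings(17))                        # True
print(can_change_settings(14))                        # False
print(can_change_settings(14, parent_approved=True))  # True
```

Note that both groups keep `content_restricted` and `messaging_restricted` set; only who may change settings differs between them.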


The Process of Age Verification

While Meta’s AI tool is designed to catch underage users who may be falsifying their age, the company also offers a way to dispute the AI’s decision. Users who believe the tool has misclassified them can appeal by submitting proof of their actual age, either a government-issued ID or a video selfie processed through Meta’s partner, Yoti. This appeal path is important for ensuring that the system doesn’t wrongly categorize legitimate adult users as minors, since it gives them a way to verify their age.

Meta had previously used a system in which users could vouch for the ages of their friends, but this method has been discontinued in favor of a more reliable, data-driven approach. The company has not disclosed the accuracy of its AI tool, but it is clear that the move to verify underage users is part of a broader effort to bolster safety features on its platform.

Teen Accounts: More Restrictions, More Safety

Once the “adult classifier” tool determines that a user is under 18, their account will be automatically moved to a “Teen Account,” which comes with a range of security measures specifically designed to protect minors. For example, teen accounts have limits on who can message them. Direct messages from strangers, particularly those from individuals who are not following the teen, will be blocked by default.
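The default messaging rule can be sketched as a simple inbox filter. Following the article’s description, this assumes a sender counts as a “stranger” when they do not follow the teen; the `strict_messaging` flag, the `(sender, text)` message representation, and the function names are hypothetical.

```python
from typing import Iterable, List, Set, Tuple

Message = Tuple[str, str]  # (sender handle, text); hypothetical representation

def dm_allowed(sender: str, teen_followers: Set[str], strict_messaging: bool = True) -> bool:
    """With the default strict setting, only accounts that follow the teen get through."""
    if not strict_messaging:
        return True
    return sender in teen_followers

def filter_inbox(messages: Iterable[Message], teen_followers: Set[str],
                 strict_messaging: bool = True) -> List[Message]:
    """Drop DMs from senders who do not follow the teen account."""
    return [m for m in messages if dm_allowed(m[0], teen_followers, strict_messaging)]

inbox = [("classmate", "see you tomorrow"), ("stranger", "hey, dm me")]
print(filter_inbox(inbox, teen_followers={"classmate"}))
# [('classmate', 'see you tomorrow')]
```

Making strictness the default parameter value mirrors the article’s point that stranger DMs are blocked unless the setting is changed.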

In addition, teens will see more prominent reminders to manage their privacy settings. For instance, Instagram will encourage younger users to review who can see their posts and how they interact with the platform. The goal is to make it easier for young people to control who can access their information and to limit their exposure to potentially harmful content, including bullying, explicit material, and predatory behavior.

Another important feature of Teen Accounts is content moderation. Instagram’s algorithm will apply stricter filtering to accounts belonging to users under 18, screening out content that may not be appropriate for younger audiences. For example, content that promotes substance abuse, hate speech, or explicit images will be more tightly restricted for teen users.

What Happens When the AI Misclassifies a User?

One key concern surrounding this new system is the accuracy of the AI tool. Because Meta has not disclosed specific details about how the AI works or how accurate it is, there is a risk of false positives, where legitimate adult users are misclassified as underage. In such cases, users can appeal by submitting proof of their age through a government-issued ID or a video selfie. This option helps mitigate the risk of misidentification, but it still creates a potential barrier for users who are unfamiliar with the appeal process or who have difficulty accessing the necessary documentation.

While the introduction of the adult classifier AI is a significant move toward improving user safety, it’s not without its challenges. The tool needs to strike a balance between protecting young users and ensuring that adults are not wrongly restricted. Meta will also need to keep refining the AI’s accuracy so that its reliance on automated systems does not inadvertently alienate legitimate users.

The Legal and Ethical Implications

The introduction of AI tools to detect and restrict underage users also raises important questions about privacy and the ethical use of technology. While the initiative is clearly aimed at protecting children, it also introduces a level of surveillance that some critics may find concerning. Tracking users’ behavior—such as the types of content they engage with and birthday posts—could be seen as an invasion of privacy, especially if the tool is not entirely transparent in how it makes its determinations.

Meta is likely to face more scrutiny over the use of this AI technology in the coming months. The company will need to balance its safety measures with respect for user privacy, ensuring that the data used for age verification and classification is handled responsibly. Additionally, as AI technology evolves, so too will the legal and ethical frameworks governing its use, and Meta may find itself at the center of debates about AI regulation and the protection of minors online.
