
Amazon is preparing a new generation of artificial intelligence chips as part of a broader effort to reduce its reliance on Nvidia, the market leader in AI processors, and to lower costs both for itself and for its Amazon Web Services customers.

The move is part of the company’s wider investment in semiconductor technology, which is integral to its continuing effort to transform data center infrastructure and meet the rising demand for AI services.

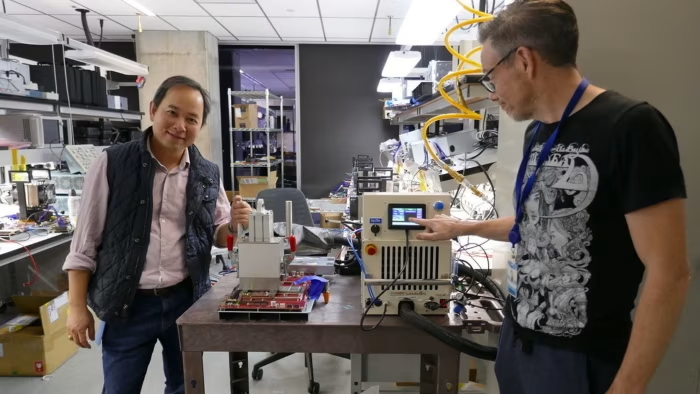

The effort is led by Annapurna Labs, an Israeli chip start-up that Amazon acquired for $350 million in 2015. Since the acquisition, Annapurna has designed a series of custom chips for AWS to optimize cloud computing for AI workloads. These include Trainium 2, the latest generation of Amazon’s AI-focused chips, which is expected to be unveiled soon, probably next month. Trainium 2 is one piece of a larger bet by Amazon to challenge Nvidia’s dominance of the AI chip market.

Amazon’s Ambition: Taking on Nvidia

Amazon’s push to design its own AI chips fits its broader vision of building a more vertically integrated infrastructure that decreases its reliance on third-party vendors like Nvidia. Its ambition is to make AWS not only the easiest place to run Nvidia chips but also a strong alternative with custom-designed silicon.

According to Dave Brown, vice president of compute and networking services at AWS, the company wants there to be alternatives in the market. Even as it commits to supporting Nvidia’s GPUs, Amazon sees value in having its own chips, which can deliver efficiency gains and savings to customers. The company says its Inferentia chips, which are tailored for inference tasks, already deliver 40% cost savings compared with running similar workloads on Nvidia’s hardware.

Companies using the custom chips include Anthropic, an AI startup and competitor to OpenAI, which has begun testing Trainium 2. Databricks, Deutsche Telekom, and Ricoh are also testing Amazon’s AI chips as AWS continues its expansion into the AI space.

Increasing Need for AI Infrastructure

Demand for AI services remains high, especially in cloud computing, where providers such as Amazon, Microsoft, and Google have invested billions in AI infrastructure. Amazon’s capital expenditures are set to reach around $75 billion in 2024, significantly higher than the $48.4 billion of 2023. The increase speaks to the growing importance of AI infrastructure and custom chip development in the company’s long-term strategy.

Amazon’s cloud services are expanding at a torrid pace, and custom chips play a large part in its efforts to maintain its position in the highly competitive AI market. While AWS remains Nvidia’s biggest customer, the company is also developing its own alternative, which could give customers more choices for optimizing AI workloads at scale.

Beyond AI Chips: Innovation

It’s about more than competition with Nvidia, though. It’s also about efficiency and cost-effectiveness in cloud computing. Through Annapurna Labs, Amazon has gained important ground with Graviton, an Arm-based central processing unit designed to consume less power than the conventional Intel and AMD central processing units used in data centers.

While Nvidia’s GPUs are powerful general-purpose processors, Amazon’s chips are optimized for particular AI workloads and tend to consume less power, which improves efficiency in the company’s data centers. As G. Dan Hutcheson, a tech analyst at TechInsights, remarks, the chips Amazon developed “can mean tens of millions of dollars of savings ... because of power, which compounds in large data centers.”

The aim is more than producing powerful chips. Amazon is building an integrated infrastructure that combines custom-designed hardware, proprietary software, and optimized server architectures to improve overall efficiency in AWS data centers. Such an integrated approach promises significant cost savings, faster processing, and greater control over cloud resources.

Challenges to Nvidia’s Dominance

All this notwithstanding, Amazon still has much to do before it can challenge Nvidia’s position in the AI chip market. Nvidia’s GPUs serve the great majority of AI workloads in cloud data centers. In its second fiscal quarter of 2024, Nvidia generated $26.3 billion in AI data center chip sales, an amount similar to the revenue AWS generated in the same period.

Amazon is confident in how much its chips have improved, citing a fourfold performance gain from Trainium 1 to Trainium 2, but it declines to comment on how they stack up against Nvidia’s. The company has not yet submitted the chips for independent benchmarks. Analysts believe the real test will come when the chips are deployed in large fleets of servers.

Benchmarks help customers decide whether a chip is worth considering, said Patrick Moorhead, a chip analyst at Moor Insights & Strategy. “The real test, however, is how the chips perform in mass deployment.” Success for Amazon’s AI chips will therefore depend on whether they scale and can meet the needs of major cloud customers, who require flexibility, performance, and cost efficiency.

A Future Beyond Nvidia: Diversifying the Chip Ecosystem

While Nvidia dominates the AI chip market, many companies, including Amazon, believe that the market needs more players. “People like all the innovations that Nvidia brought, but nobody feels comfortable with the situation when Nvidia has 90% of the market share,” Moorhead said. “This can’t last too long.”

As demand for AI accelerates, the choice of AI infrastructure will become more significant. Investing in custom chips positions Amazon both to innovate and to offer alternatives rather than rely on Nvidia alone, ensuring AWS remains an option for customers who want to avoid depending on the dominant AI chip provider.

Amazon’s strategy reflects a larger trend in the tech sector, where the largest players increasingly design their own hardware to complement their cloud and AI platforms. The shift toward custom silicon will shape the future of cloud computing, giving companies like Amazon more control over their infrastructure and cost structures as they compete in a fast-growing AI market.

source: ft